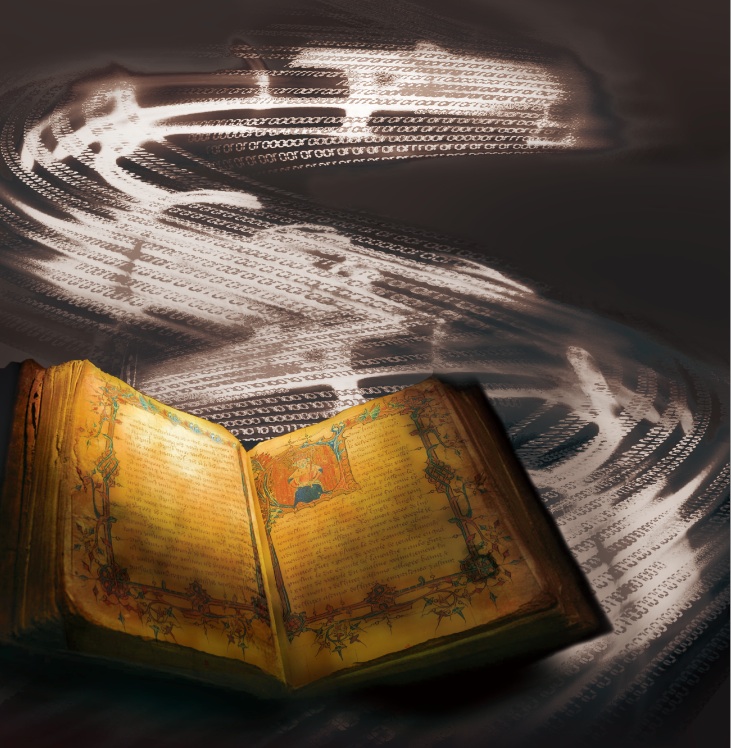

Reading a medieval manuscript is like getting a glimpse at another reality. Like a window opening onto another life, these words written centuries ago teleport the reader into the past. But merely looking at words on a page would barely scratch the surface of all there is to learn from a medieval manuscript. How did the scribe write? What inks were used? What is in the foreground, versus the background? What makes studying these texts especially challenging is the fact that worn and aged manuscripts are extremely delicate.

Bridging the gap between past and present, however, is a thoroughly modern field: computer science. Now, by merely opening another sort of window — a web browser — you can access millions of digitized images of manuscripts. Advances in machine learning have allowed computers to move beyond simply presenting images of texts to quite literally reading them. With a tool like optical character recognition, a computer program can identify text within images.

Still, computers are no medievalists. Medieval manuscripts pose a particular problem for computer-assisted research — the handwriting style and state of preservation of the text both limit the accuracy of optical character recognition. In addition, recording the material properties of a medieval manuscript is incredibly time-consuming. The materiality of manuscripts may obscure text over time, but it also betrays the secrets of books: how they were made, and by whom. Scientists and historians alike are thus interested in discerning material properties of old texts, and they need efficient, non-invasive techniques that can handle the sheer size of the medieval corpus.

To this end, Yale researchers have developed an algorithm capable of sifting through thousands of images to discern how many inks were used in the scribing of each and every page of a manuscript. Led by Yale professor of computer science Holly Rushmeier, this project is one component of an interdisciplinary collaboration with Stanford University, known as Digitally Enabled Scholarship with Medieval Manuscripts. This algorithm in particular is driven by the fundamental principle of clustering, which groups pixels into specific categories. It gets at the number of inks used in individual manuscripts, but also offers efficiency in analyzing large databases of images with quickness and accuracy. While there are other computer platforms relevant to the topic of medieval manuscripts, most focus on simple methods such as word spotting and few can efficiently capture material properties.

The question might seem simple on its surface — how many colors did this scribe use thousands of years ago — but the answer is quite telling. A better understanding of what went into the creation of medieval manuscripts can reveal new details about cultures and societies of the past. Rushmeier’s research takes a technical approach to history, using computer science and mathematical formulas to reach conclusions about medieval texts. She hopes her findings will aid both scientific and historical scholarship in years to come. Her work stands at the intersection of science and history, and tells a compelling story about texts of the past and programs of the future.

Uncovering a manuscript’s true colors

Scholars have long been interested in the colors used in medieval manuscripts. In the past, researchers discerned variations in the colors used in individual and small groups of pages. But for an entire manuscript, or in large comparative studies, quantifying color usage by visual inspection is not feasible. Computers, on the other hand, can wade through thousands of images without tiring.

Computers can assign values to different colors, and can then group similar colors into clusters. The K value, or number of distinct inks on a page, can then be determined. For many years, scientists have been able to manually count independent inks to obtain approximate K values. In contrast, the algorithm developed by Rushmeier’s team is an automatic method of estimating K, which is more efficient than prior eyeballing methods.

The computer scientists clustered three types of pixels: decorations, background pixels, and foreground pixels. Decorations included foreground pixels that were not specifically part of text, while foreground pixels referred to words written on the page. To test the quality of their clustering method, namely its accuracy in determining the number of inks used per manuscript, the researchers practiced on 2,198 images of manuscript pages from the Institute for the Preservation of Cultural Heritage at Yale.

To evaluate accuracy, the researchers compared K values produced by the algorithm to K values obtained manually. In an analysis of 1,027 RGB images of medieval manuscripts, which have red, green, and blue color channels, 70 percent of the initial K values output by the computer matched the number of inks counted manually. When the value of K was updated after checking for potential errors, the algorithm’s value either matched the value determined by eye or deviated by only one color 89 percent of the time. The scientists were pleased to see such high accuracy in their algorithm, and also realized the important of updating K to produce results closer to reality.

Checking for errors is necessary because even computers make mistakes, and finding the K value for a medieval manuscript page is no small feat. For one, even a single ink color can display a tremendous amount of variation. The degradation of organic compounds in the ink causes variations in the intensity of pigment to multiply over time. Even at the time of writing, the scribe could have applied different densities of ink to the page. “There’s the potential for seeing differences that could just be from using a different bottle of the same ink,” Rushmeier said.

A computer runs the risk of overestimating the number of distinct color groups on a page. Without a proper check, Rushmeier’s algorithm would produce a K value higher than what is truly reflected in the manuscript. Natural variations in pigment color should not be construed as separate inks.

What constitutes a cluster?

Medieval manuscripts have a high proportion of background and foreground text pixels relative to decorations. Before the computer carried out clustering, only the non-text, foreground pixels were isolated. Differentiating between foreground and background pixels required a technique called image binarization. This was the crucial first step in designing an algorithm to calculate a K value, according to postdoctoral associate Ying Yang, who worked on the project.

The color image of the manuscript page was converted into a gray scale that had 256 different color intensities. The number of pixels for each of the intensities was sorted into a distribution, and pixel values within the peak of the distribution were deemed to be foreground, while the rest were labeled as background noise. In the resulting binary image, foreground pixels were assigned a zero, while background pixels were assigned a one.

After the foreground had been differentiated from the background, text had to be separated from non-text pixels. Incidentally, the handwriting in medieval manuscripts lends itself to this task. Yang noted that in medieval Western Europe, text was written in straight bars. “It’s as if they deliberately tried to make each letter look like every other letter,” Rushmeier said. Though this makes computer-assisted research more difficult in some respects, the team of Yale scientists used the similarity of text strokes to their advantage.

Since the bar-like writing technique of medieval scribes makes for fairly uniform letters, the scientists used a re-sizeable, rectangular template to match and identify each pen stroke. First, they gathered information about text height and width from the binary image. Once the size of the template had been established, it was used to match with text. Only strokes of a similar size to the rectangle were given high matching scores. Since ornately designed capital letters were not of a similar size compared to the rest of the text, they received low matching scores.

Pixels with low matching scores that were also valued at zero in the binary image were deemed to be foreground, non-text pixels that were candidates for clustering. Once the candidates were identified, they could finally be classified into clusters. Of course this method meant that high matching text was overlooked. The algorithm had a built-in remedy: the computer automatically added one to the total number of clusters derived from candidate pixels, which resulted in the initial value of K. This ensured that the text-cluster, itself representative of the primary ink used in writing the manuscript, was counted.

Of course this addition would have lead to an overestimation of the K value whenever any text pixels were erroneously considered candidates for clustering. The Yale team devised a clever solution to this problem. The scientists compared the color data for each of the K clusters with the color of the text. A striking similarity between one of these clusters and the text would indicate that the cluster was derived from misrouted text pixels.

The color of the text had yet to be determined. To obtain this piece, the team performed another round of clustering. This time, all foreground pixels — text and non-text — were deemed to be candidates. Given the large quantity of text pixels, the text-cluster was fairly easy to spot. While the only new information generated in this round of clustering was the pixel color values of the text-cluster, this detail was essential in ensuring an accurate count of inks used on a page.

Importantly, the computer algorithm had checks in place to add and subtract from the K value depending on risk of over or underestimation. It worked efficiently, but did not sacrifice thoroughness. In the end, the computer revealed a well-kept secret of the medieval manuscript by outputting an accurate value for K.

The bigger picture

The algorithm was used to analyze more than 2,000 manuscript images, including RGB images and multispectral images, which convey data beyond visible light in the electromagnetic spectrum. By calculating K more quickly, this program offers a more directed research experience. For example, scholars curious about decorative elements — say, elaborately designed initials and line fillers within a manuscript — can focus on pages with relatively high K values instead of spending copious amounts of time filtering through long lists of manuscripts. In general, once K has been determined, the non-text clusters can be used for further applications. In detecting features such as ornately drawn capital letters and line fillers, the team had 98.36 percent accuracy, which was an incredible, exciting result.

Though the team is nearing the end of current allotted funding, provided by the Mellon Foundation, Rushmeier said the group has more ideas regarding the impact K could have on scholarly research. For instance, with some modifications, the algorithm could reach beyond books and be repurposed for other heritage objects. According to Rushmeier, in exploring the material properties of medieval manuscripts with computer science, we have only “scratched the surface.”

About the Author: A junior in Berkeley College, Amanda Buckingham is double majoring in Molecular Biology and English. She studies CRISPR/Cas9 at the Yale Center for Molecular Discovery and oversees stockholdings in the healthcare sector for Smart Woman Securities’ Investment Board. She also manages subscriptions for the Yale Scientific.

Acknowledgments: The author would like to thank Dr. Holly Rushmeier and Dr. Ying Yang for their enthusiastic and lucid discussion of a fascinating, interdisciplinary topic!

Further Reading: Yang, Ying, Ruggero Pintus, Enrico Gobbetti, and Holly Rushmeier. “Automated Color Clustering for Medieval Manuscript Analysis.” https://graphics.cs.yale.edu/site/sites/files/DH2015_ID193_final_1.pdf.

Cover image by Christina Zhang.